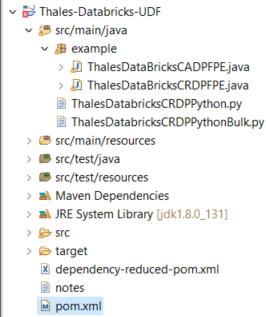

Download and Compile Code Using GitHub

Clone the repository to your local machine.

git clone https://github.com/ThalesGroup/CipherTrust_Application_Protection.git

Note: This document does not include every available notebook sample. For a complete listing, refer to the GitHub repository.

The database directory has all the code for Databricks.

Ensure that your CipherTrust Manager is already configured correctly. Each of the classes has a main method that can be used to test locally.

Modify the udfConfig.properties file values, such as CMUSERID, CMPWD, and other necessary settings. For more information on udfConfig.properties, see Configuration File and Environment Variables.

Generate the JAR File to Upload to Databricks

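To compile the project and generate the JAR file to upload to the Databricks compute platform, select the project in your IDE and choose Run As > Maven install (the equivalent of running mvn install from the command line). The JAR files are written to the project's target directory. A successful build produces output similar to the following: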

[INFO]

[INFO] --- maven-install-plugin:2.4:install (default-install) @ Thales-Databricks-UDF ---

[INFO] Installing C:\Users\t0185905\workspace\Thales-Databricks-UDF\target\Thales-Databricks-UDF-6.0-SNAPSHOT.jar to C:\Users\t0185905\.m2\repository\Thales\Thales-Databricks-UDF\6.0-SNAPSHOT\Thales-Databricks-UDF-6.0-SNAPSHOT.jar

[INFO] Installing C:\Users\t0185905\workspace\Thales-Databricks-UDF\pom.xml to C:\Users\t0185905\.m2\repository\Thales\Thales-Databricks-UDF\6.0-SNAPSHOT\Thales-Databricks-UDF-6.0-SNAPSHOT.pom

[INFO] Installing C:\Users\t0185905\workspace\Thales-Databricks-UDF\target\Thales-Databricks-UDF-6.0-SNAPSHOT-jar-with-dependencies.jar to C:\Users\t0185905\.m2\repository\Thales\Thales-Databricks-UDF\6.0-SNAPSHOT\Thales-Databricks-UDF-6.0-SNAPSHOT-jar-with-dependencies.jar

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 8.482 s

[INFO] Finished at: 2024-10-17T10:28:59-04:00

Configuration File and Environment Variables

The following sample is the udfConfig.properties file from the Java project's resources directory. This file must be copied to a location in Databricks that your cluster can access.

Sample udfConfig.properties file:

# udfConfig.properties

CMUSER=apiuser

CMPWD=yourpwd!

returnciphertextforuserwithnokeyaccess=yes

CRDPIP=yourcrdpip

keymetadatalocation=external

keymetadata=1001000

protection_profile=alpha-external

protection_profile_alpha_ext=alpha-external

protection_profile_alpha_int=plain-alpha-internal

protection_profile_nbr_int=plain-nbr-internal

protection_profile_nbr_ext=plain-nbr-ext

showrevealinternalkey=yes

BATCHSIZE=20

CRDPUSER=admin
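The UDF reads these settings at runtime. As a minimal sketch (an assumption, not the project's actual loader), the following Java class parses properties-format text with java.util.Properties and converts a few of the sample keys into typed values. The class and field names are hypothetical, treating only "yes" as true is an assumption, and the fallback of 200 for BATCHSIZE is taken from the example value in the table below. CMPWD is deliberately skipped so the password is never printed.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class UdfConfigSketch {

    // Hypothetical holder for a subset of the keys in the sample udfConfig.properties.
    public static final class Settings {
        final String cmUser;
        final String crdpIp;
        final String protectionProfile;
        final int batchSize;
        final boolean returnCiphertextForUserWithNoKeyAccess;
        final boolean showRevealInternalKey;

        Settings(Properties p) {
            cmUser = p.getProperty("CMUSER");
            crdpIp = p.getProperty("CRDPIP");
            protectionProfile = p.getProperty("protection_profile");
            // Assumption: fall back to 200 (the table's example value) when BATCHSIZE is absent.
            batchSize = Integer.parseInt(p.getProperty("BATCHSIZE", "200").trim());
            returnCiphertextForUserWithNoKeyAccess = isYes(p, "returnciphertextforuserwithnokeyaccess");
            showRevealInternalKey = isYes(p, "showrevealinternalkey");
        }
    }

    // Assumption: "yes" (case-insensitive) means true; any other value or a missing key means false.
    static boolean isYes(Properties p, String key) {
        return "yes".equalsIgnoreCase(p.getProperty(key, "").trim());
    }

    // Parses properties-format text; wraps the checked IOException for convenience.
    public static Settings fromString(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new Settings(p);
    }

    public static void main(String[] args) {
        Settings s = fromString(String.join("\n",
                "CMUSER=apiuser",
                "CRDPIP=yourcrdpip",
                "protection_profile=alpha-external",
                "returnciphertextforuserwithnokeyaccess=yes",
                "showrevealinternalkey=yes",
                "BATCHSIZE=20"));
        // prints "apiuser 20 true"
        System.out.println(s.cmUser + " " + s.batchSize + " " + s.returnCiphertextForUserWithNoKeyAccess);
    }
}
```

Converting the raw strings once into typed fields keeps parsing errors (for example, a non-numeric BATCHSIZE) at startup rather than in the middle of processing rows.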

The following table describes the configuration file variables used by the UDF.

| Key | Example Value | Description |
|---|---|---|
| BATCHSIZE | 200 | Number of rows per chunk when using batch mode |
| CRDPIP | yourip | IP address of the CRDP container, if using CRDP |
| keymetadata | 1001000 | Policy and key version, if using CRDP |
| keymetadatalocation | external | Location of the key metadata (internal or external), if using CRDP |
| protection_profile | plain-nbr-ext | Protection profile in CipherTrust Manager to use with CRDP |
| returnciphertextforuserwithnokeyaccess | yes | If the user does not exist in CipherTrust Manager, whether the UDF returns the ciphertext (yes) or errors out |
| showrevealinternalkey | yes | Whether to show the key metadata when issuing a protect call (CRDP) |
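BATCHSIZE controls how many rows are grouped into one chunk in batch mode. The following Java sketch illustrates only the chunking idea; the class and method names are hypothetical, and how the UDF actually sends each chunk to CipherTrust Manager or CRDP is not shown.

```java
import java.util.ArrayList;
import java.util.List;

public class BatchChunkSketch {

    // Splits rows into consecutive chunks of at most batchSize elements.
    // The last chunk holds the remainder and may be smaller.
    public static <T> List<List<T>> chunk(List<T> rows, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("BATCHSIZE must be positive: " + batchSize);
        }
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < rows.size(); start += batchSize) {
            int end = Math.min(start + batchSize, rows.size());
            // Copy the subList view so each batch is independent of the source list.
            batches.add(new ArrayList<>(rows.subList(start, end)));
        }
        return batches;
    }

    public static void main(String[] args) {
        List<String> rows = new ArrayList<>();
        for (int i = 0; i < 45; i++) {
            rows.add("value-" + i);
        }
        // With BATCHSIZE=20 (the sample file's value), 45 rows become batches of 20, 20, and 5.
        for (List<String> batch : chunk(rows, 20)) {
            System.out.println(batch.size());
        }
    }
}
```

A larger BATCHSIZE means fewer, larger chunks; the sample file uses 20 while the table shows 200, so tune the value for your workload.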

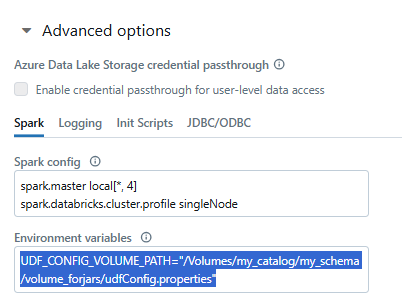

The UDF_CONFIG_VOLUME_PATH cluster environment variable is required so that the UDF can locate the udfConfig.properties file. Set it in the Advanced Options of the cluster, and make sure the file is stored on a cluster volume.
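The following Java sketch shows one way a lookup through UDF_CONFIG_VOLUME_PATH could work; it is an assumption, not the project's code. It treats the variable's value as the full path to udfConfig.properties and, if the value instead names an existing directory, appends udfConfig.properties to it. The class and method names are hypothetical. On a cluster you would pass System.getenv(); the demo in main uses a temporary file and a synthetic environment map so it runs anywhere.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Collections;
import java.util.Map;
import java.util.Properties;

public class ConfigPathSketch {

    static final String ENV_VAR = "UDF_CONFIG_VOLUME_PATH";
    static final String FILE_NAME = "udfConfig.properties";

    // Assumption: the variable holds the full path of udfConfig.properties.
    // If it names an existing directory instead, the file name is appended.
    public static Path resolveConfigPath(Map<String, String> env) {
        String value = env.get(ENV_VAR);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException(ENV_VAR + " is not set on this cluster");
        }
        Path path = Paths.get(value.trim());
        return Files.isDirectory(path) ? path.resolve(FILE_NAME) : path;
    }

    // Reads the properties file at the resolved location (Properties.load uses ISO-8859-1).
    public static Properties load(Path path) throws IOException {
        Properties p = new Properties();
        try (InputStream in = Files.newInputStream(path)) {
            p.load(in);
        }
        return p;
    }

    public static void main(String[] args) throws IOException {
        // On a cluster you would call resolveConfigPath(System.getenv()).
        // This demo writes a temporary file and uses a synthetic environment map instead.
        Path tmp = Files.createTempFile("udfConfig", ".properties");
        try {
            Files.write(tmp, "BATCHSIZE=20\n".getBytes(StandardCharsets.ISO_8859_1));
            Map<String, String> env = Collections.singletonMap(ENV_VAR, tmp.toString());
            Properties p = load(resolveConfigPath(env));
            System.out.println(p.getProperty("BATCHSIZE")); // prints 20
        } finally {
            Files.deleteIfExists(tmp);
        }
    }
}
```

Failing fast with a clear message when the variable is missing makes a misconfigured cluster easier to diagnose than a later NullPointerException.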